CSCI 567: Machine Learning

Basic Information

- Lecture time: Fridays 1:00 pm to 3:20 pm, followed by discussion from 3:30 pm to 4:20 pm

- Lecture place: SGM 124

- Instructor: Vatsal Sharan (vsharan)

- TAs: Yavuz Faruk Bakman (ybakman), Robby Costales (rscostal), Xiao Fu (fuxiao), Sampad Mohanty (sbmohant), Duygu Nur Yaldiz (yaldiz), Grace Zhang (gracez), Mengxiao Zhang (zhan147)

- Course Producers: Ashwini Ainchwar (ainchwar), Kriti Asija (kasija), Aman Bansal (amanbans), Garima Dave (garimada), Sai Anuroop Kesanapalli (kesanapa)

- Office Hours: Available on this calendar: Google calendar.

- Communication: All inquiries which do not pertain to a specific member of the course staff should be sent via Ed Discussion (see below). USC email IDs of all staff members are in parentheses above.

- Ed Discussion: We will be using Ed for all course communications (regarding homework, project, course scheduling, etc.). Please feel free to ask or answer any questions about the class on Ed. You can post privately on Ed to contact the course staff for any reason. You should be enrolled in Ed automatically.

- Gradescope: We will use Gradescope for assignment and final project submission. You will be enrolled in Gradescope automatically.

Course Description and Objectives

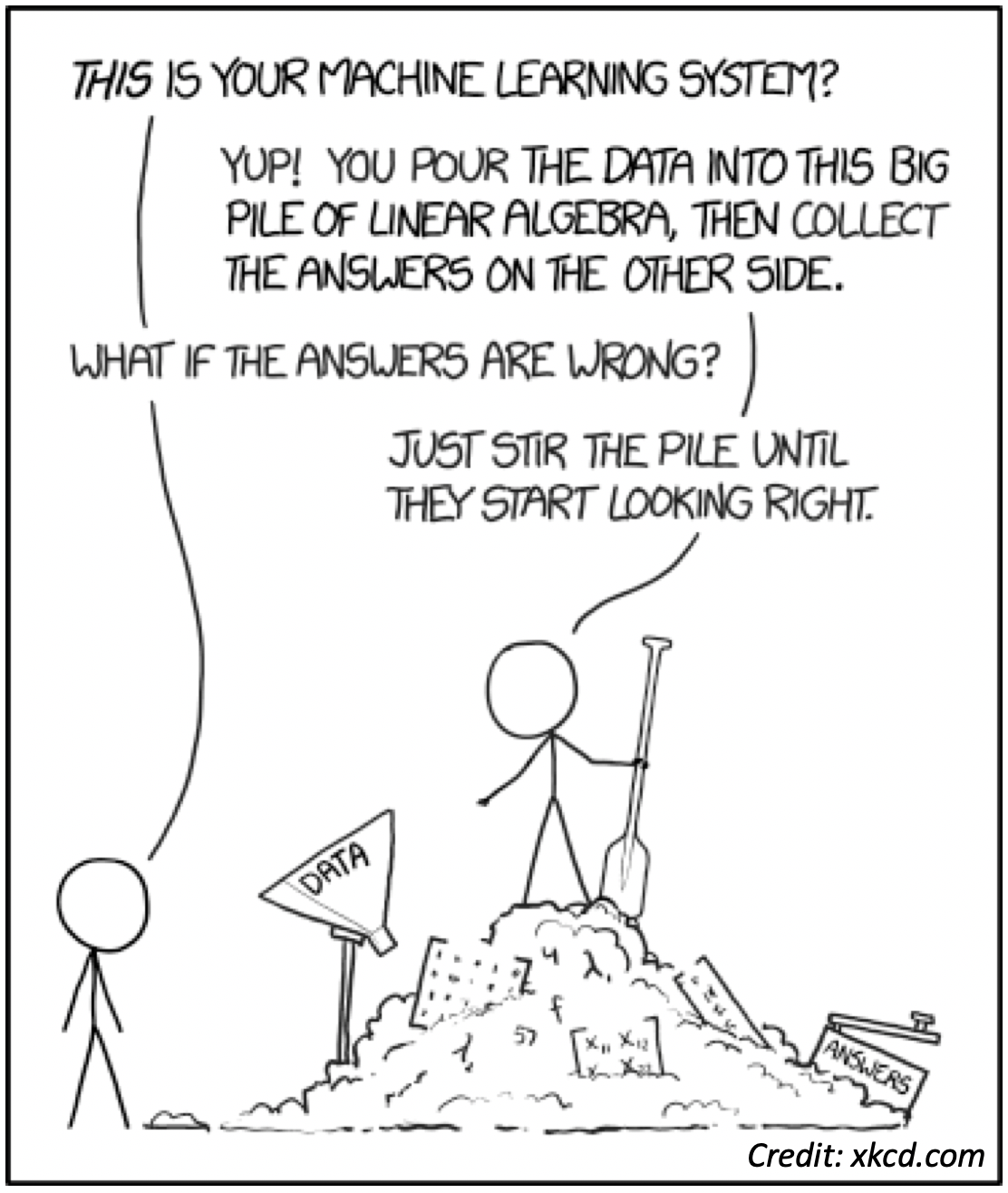

Is this what we'll learn to do in this class? Or is this what we'll learn not to do? 🤔🤔🤔

The chief objective of this course is to introduce standard statistical machine learning methods, including but not limited to various methods for supervised and unsupervised learning problems. Particular focus is on the conceptual understanding of these methods, their applications, and hands-on experience.

Prerequisites

(1) Undergraduate level training or coursework on linear algebra, (multivariate) calculus, and basic probability and statistics;

(2) Basic skills in programming with Python;

(3) Undergraduate level training in the analysis of algorithms (e.g. runtime analysis).

Syllabus and Materials

The following is a tentative schedule. The exam timings are fixed, but the rest of the content will likely change as the course continues. We will also post lecture notes and assignments here. Please refer to Ed Discussion for recommended readings.

| Date | Topics | Lecture notes | Homework / Practice problems |
|---|---|---|---|
| 1/12 | Lecture 1: Introduction, Linear regression; Optimization algorithms Discussion: Linear algebra review | Lecture slides, Optimization Colab, Discussion slides | Linear algebra questions I |
| 1/19 | Lecture 2: Linear classifiers; Perceptron; Logistic regression Discussion: Probability review | Lecture slides, Optimization Colab, Discussion slides | Probability questions, HW1, HW1 solutions |
| 1/26 | Lecture 3: Generalization; Nonlinear basis; Regularization Discussion: Linear algebra review | Lecture slides, Nonlinear functions Colab, Discussion notes | Linear algebra questions II |
| 2/2 | Lecture 4: L1 regularization; Kernel methods Discussion: Linear algebra & Numpy review | Lecture slides, Discussion notes | |
| 2/9 | Lecture 5: SVM Discussion: HW1 review | Lecture slides, SVM Colab | HW2, HW2 solutions |
| 2/16 | Lecture 6: Multiclass classification; Neural Networks Discussion: Problem discussion for Exam 1 | Lecture slides | Practice problems, Practice problems (solution) |
| 2/23 | Lecture 7: Optimization for neural networks, CNNs Discussion: HW2 review | Lecture slides, Discussion notes | |
| 3/1 | Lecture: Exam 1 📝 No Discussion session | | |
| 3/8 | Lecture 8: Language modelling, Markov models, RNNs, Attention Discussion: Project overview | Lecture slides, Discussion notes | HW3, HW3 solutions |
| 3/15 | Spring break ☀️ | | |
| 3/22 | Lecture 9: Transformers; Decision trees; Ensemble methods Discussion: LLMs and foundation models | Lecture slides, Discussion notes | |
| 3/29 | Lecture 10: Boosting; Dimensionality reduction and visualization; PCA Discussion: HW3 review | Lecture slides, Discussion notes | HW4, HW4 solutions |
| 4/5 | Lecture 11: Clustering; k-means; Gaussian mixture models; EM Discussion: Evaluation metrics (precision, recall etc.) | Lecture slides, Discussion notes | |
| 4/12 | Lecture 12: Density estimation; Generative models & Naive Bayes; Multi-armed bandits Discussion: HW4 review | Lecture slides | Practice problems, Fall 2022 exam |
| 4/19 | Lecture 13: Reinforcement Learning; Responsible ML, Fairness, Robustness, Privacy Discussion: Problem solving for Exam 2 | Lecture slides | Practice problems (solutions) |
| 4/26 | Lecture: Exam 2 📝 No Discussion session | | |
| 5/6 | Project report due 📕 | | |

Requirements and Grading

- 4 homeworks worth 40% of the grade. The homeworks will be a combination of theoretical and exploratory programming questions. They should be done in groups of 2. Two late days will be available to every student for the homeworks. If a group submits one day late, one late day will be subtracted from each group member. A maximum of one late day can be applied to any homework.

- Two exams during class hours worth 20% each. The exams will test conceptual understanding of the material covered in the lectures, discussions and assignments.

- A course project worth 20%. The project should be in groups of 4 students. More information will be released later.

- Contributions to the class (Discretionary Grade Bumps): You are encouraged to help your fellow classmates when possible and improve everyone's learning experience, such as by responding to Ed Discussion questions when you know the answer. At the end of the course, we will bump up grades of those students who had the most positive impact on the class, according to the (quite subjective) judgement of the course staff.
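As a rough illustration of how the weights and the late-day rule above combine, here is a minimal Python sketch. It is not an official grade calculator: the function names, the 0-100 score scale, and the reading that every group member needs a remaining late day are assumptions made for this example, and discretionary grade bumps are not modeled.

```python
# Weights taken from the grading breakdown above.
WEIGHTS = {"homework": 0.40, "exam1": 0.20, "exam2": 0.20, "project": 0.20}

LATE_DAYS_PER_STUDENT = 2   # stated in the homework policy
MAX_LATE_DAYS_PER_HW = 1    # stated in the homework policy


def weighted_score(scores):
    """Combine component scores (assumed to be on a 0-100 scale)."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def can_submit_late(days_late, remaining_per_member):
    """Check a late submission against the late-day rule.

    Assumption: every group member must still have enough late days,
    since one late day is subtracted from each member.
    """
    if days_late == 0:
        return True
    if days_late > MAX_LATE_DAYS_PER_HW:
        return False
    return all(remaining >= days_late for remaining in remaining_per_member)


example = {"homework": 90.0, "exam1": 80.0, "exam2": 70.0, "project": 100.0}
print(round(weighted_score(example), 2))
print(can_submit_late(1, [LATE_DAYS_PER_STUDENT, 1]))
print(can_submit_late(1, [LATE_DAYS_PER_STUDENT, 0]))
```

With the hypothetical scores above, the homework average contributes 40% and each of the two exams and the project contributes 20% to the total.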

Resources and related courses

- There is no required textbook for this class, but the following books are good supplemental reading for many parts.

- Probabilistic Machine Learning: An Introduction [PML] by Kevin Murphy. Available online here.

- Elements of Statistical Learning [ESL] by Trevor Hastie, Robert Tibshirani and Jerome Friedman. Available online here.

- Patterns, Predictions, and Actions: A story about machine learning, by Moritz Hardt and Benjamin Recht. Available online here.

- (for more of the theory) Understanding Machine Learning: From Theory to Algorithms, by Shai Shalev-Shwartz and Shai Ben-David. Available online here.

- This course draws heavily from several other related courses, particularly the previous iteration of this class by Prof. Haipeng Luo and other USC faculty.

Helpful reminders

Collaboration policy and academic integrity: Our goal is to maintain an optimal learning environment. You can discuss the homework problems at a high level with other groups, but you should not look at any other group's solutions. Trying to find solutions online or from any other sources for any homework or project is prohibited, will result in a grade of zero, and will be reported. To prevent any future plagiarism, uploading any material from the course (your solutions, exams, etc.) to the internet is prohibited, and any violations will also be reported. Please be considerate, and help us help everyone get the best out of this course.

Please remember the Student Conduct Code (Section 11.00 of the USC Student Guidebook). General principles of academic honesty include the concept of respect for the intellectual property of others, the expectation that individual work will be submitted unless otherwise allowed by an instructor, and the obligations both to protect one's own academic work from misuse by others as well as to avoid using another's work as one's own. All students are expected to understand and abide by these principles. Students will be referred to the Office of Student Judicial Affairs and Community Standards for further review, should there be any suspicion of academic dishonesty.

Students with disabilities: Any student requesting academic accommodations based on a disability is required to register with Disability Services and Programs (DSP) each semester. A letter of verification for approved accommodations can be obtained from DSP. Please be sure the letter is delivered to the instructor as early in the semester as possible.